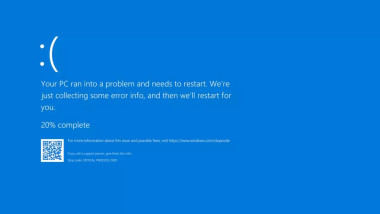

Today I'm grateful I'm using Linux - CrowdStrike update causes BSOD on Windows, triggering global IT issues

This isn’t a gloat post. In fact, I was completely oblivious to this massive outage until I tried to check my bank balance and it wouldn’t log in.

Apparently Visa Paywave, banks, some TV networks, EFTPOS, etc. have gone down. Flights have had to be cancelled as some airlines’ systems have also gone down. Gas stations and public transport systems are inoperable, and numerous Windows systems and Microsoft services are affected. (At least according to one of my local MSM outlets.)

It seems insane to me that one company’s messed-up update could cause so much global disruption and take so many systems down :/ This is exactly why centralisation of services, with large corporations gobbling up smaller companies and becoming behemoth services, is so dangerous.