A new tool for copyright holders can show if their work is in AI training data

Since the beginning of the generative AI boom, content creators have argued that their work has been scraped to train AI models without their consent. But until now, it has been difficult to know whether a specific text has actually been used in a training data set.

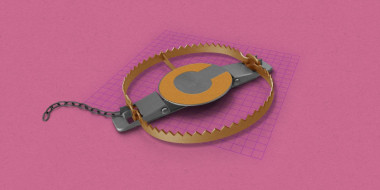

Now they have a new way to prove it: “copyright traps,” developed by a team at Imperial College London. These are pieces of hidden text that let writers and publishers subtly mark their work so they can later detect whether it has been used to train AI models. The idea is similar to traps that copyright holders have used throughout history, such as including fake locations on a map or fake words in a dictionary.

These AI copyright traps tap into one of the biggest fights in AI. A number of publishers and writers are in the middle of litigation against tech companies, claiming their intellectual property has been scraped into AI training data sets without their permission. The New York Times’ ongoing case against OpenAI is probably the most high-profile of these.

The code to generate and detect traps is currently available on GitHub, but the team also intends to build a tool that allows people to generate and insert copyright traps themselves.